On Monday DARPA announced the next Grand Challenge. In this, the third challenge, the desert rats will have to survive in the big city: “Grand Challenge 2005 proved that autonomous ground vehicles can travel significant distances and reach their destination, just as you or I would drive from one city to the next. After the success of this event, we believe the robotics community is ready to tackle vehicle operation inside city limits.”

More details on what the robots will and will not face are in the solicitation notice:

> At the capstone event, performers will demonstrate a vehicle that exhibits the following behaviors: safe vehicle-following; operation with oncoming traffic; queueing at traffic signals; merging with moving traffic; left-turn across traffic; right-of-way and precedence observance at intersections; proper use of directional signals, brake lights, and reverse lights; passing of moving vehicles; and all the other behaviors implicit in the problem statement. Vehicles will also demonstrate navigation with limited waypoints and safe operation in complex areas such as parking lots. Recognition of traffic signals, street signs or pavement markings is considered outside the scope of the program, as this information will be furnished by DARPA. High-speed highway driving is also outside the scope of the program.

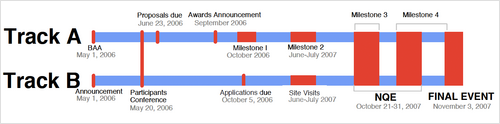

For this challenge DARPA is running two tracks. Track A seems to be the more rigorous one, with each team's progress measured against detailed milestones. The bureaucracy appears to approach standard defense-contractor levels, but the potential payout is $1,000,000. Track B seems roughly equivalent to the previous challenges: to get into the race, teams have to supply a video of their vehicle in action and then show it off during a site visit by DARPA officials. Track B's maximum award is $100,000. More details are in the Proposer Information Pamphlet.

Both tracks will come together for the qualifying event on October 21, 2007, and will compete together in the final event on November 3, 2007.

If you want to compete, note that the participants' conference is only about two weeks away!

I guess I'll look into modifying my DARPA GC forum scraper to generate RSS feeds of the new forum.
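Turning scraped posts into a feed needs nothing beyond the Python standard library. Here is a minimal sketch, assuming a hypothetical `ForumPost` record produced by the scraper (the field names and the example.com URLs are illustrative, not the real forum's), that renders an RSS 2.0 document with `xml.etree.ElementTree`:

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from email.utils import format_datetime
import xml.etree.ElementTree as ET


@dataclass
class ForumPost:
    """One scraped post (hypothetical structure; a real scraper defines its own)."""
    title: str
    link: str
    author: str
    posted: datetime  # should be timezone-aware so pubDate gets a real offset
    body: str


def build_rss(channel_title: str, channel_link: str, posts: list) -> str:
    """Render a list of ForumPost objects as an RSS 2.0 document, newest first."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = channel_title
    ET.SubElement(channel, "link").text = channel_link
    ET.SubElement(channel, "description").text = f"New posts on {channel_title}"
    for post in sorted(posts, key=lambda p: p.posted, reverse=True):
        item = ET.SubElement(channel, "item")
        # RSS 2.0 <author> expects an e-mail address, so the name goes in the title.
        ET.SubElement(item, "title").text = f"{post.author}: {post.title}"
        ET.SubElement(item, "link").text = post.link
        # A stable guid lets feed readers recognize items they have already shown.
        ET.SubElement(item, "guid").text = post.link
        # RFC 822-style date, e.g. "Wed, 03 May 2006 13:24:00 +0000".
        ET.SubElement(item, "pubDate").text = format_datetime(post.posted)
        ET.SubElement(item, "description").text = post.body
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    example = ForumPost(
        title="Urban Challenge announced",
        link="http://example.com/forum/topic/1",  # hypothetical URL
        author="geoff",
        posted=datetime(2006, 5, 3, 15, 24, tzinfo=timezone.utc),
        body="I can't wait to see TerraMax on city streets.",
    )
    print(build_rss("DARPA GC forum", "http://example.com/forum", [example]))
```

ElementTree escapes post text for you, which matters when scraped bodies contain `<` or `&`.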

Posted by jjwiseman at May 03, 2006 01:24 PM

I can't wait to see TerraMax on city streets.

Posted by: geoff on May 3, 2006 03:24 PM

Too bad there's no "pedestrian avoidance" component. BTW, I found a news reader that automatically extracts an RSS feed from any web page, http://www.diffbot.com. No more writing scrapers!

Posted by: John on May 3, 2006 11:12 PM

I would be surprised if something like diffbot were adequate for scraping a big bulletin-board-style site like DARPA's Grand Challenge forums.

Their forum has over a hundred separate pages arranged in a tree, with one page of discussion areas at the root, pages of topics below that, and pages of posts below that. You don't want to fetch 100 pages every time you update your feed.

Can diffbot use the tree structure to its advantage to avoid lots and lots of page requests? I would be pleasantly surprised if it could.
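A minimal sketch of how a scraper could exploit that tree, assuming each parent page shows a "latest activity" marker (for example a last-post timestamp) for each of its children. The `fetch_listing` callback and its `{child_url: marker}` return shape are hypothetical; a real scraper would parse them out of the forum's HTML. A subtree whose marker hasn't changed costs no requests beyond its parent page.

```python
from typing import Callable, Dict, List

# Hypothetical shape of a parsed index page: child URL -> latest-activity marker.
Listing = Dict[str, str]


def crawl_changed(
    url: str,
    fetch_listing: Callable[[str], Listing],
    seen: Dict[str, str],
    depth: int,
) -> List[str]:
    """Return URLs of leaf pages (depth 0) whose marker changed since the last crawl.

    Only parents whose marker differs from the one recorded in `seen` are fetched.
    `seen` is updated in place so the next run skips what this run already saw.
    Note: markers are recorded before descending, so a failed fetch midway would
    hide that subtree until its marker changes again; a sturdier version would
    commit `seen` only after success.
    """
    if depth == 0:
        return [url]  # leaf page: report it instead of fetching it here
    changed: List[str] = []
    for child, marker in fetch_listing(url).items():
        if seen.get(child) != marker:
            seen[child] = marker
            changed.extend(crawl_changed(child, fetch_listing, seen, depth - 1))
    return changed
```

For a root → boards → topics forum you would call `crawl_changed(root_url, fetch_listing, seen, depth=2)` and then fetch only the topic pages it returns.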

Could you do something clever with HEAD requests and the timestamps in HTTP headers (such as Last-Modified) to avoid retrieving the contents of every page? Maybe, if the software powering the bulletin board is sophisticated enough to send the right headers with accurate values (I am not optimistic). Even in the best case you would still have to make 100-200 HEAD requests.
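The HEAD-request idea can be tried with Python's standard library alone. This sketch assumes the server sends a `Last-Modified` header; when the header is missing or unparseable it conservatively treats the page as changed. The function names are hypothetical. A conditional GET with an `If-Modified-Since` header depends on the same server support; a cooperating server answers it with `304 Not Modified`.

```python
import urllib.request
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def page_changed(last_modified: Optional[str], since: datetime) -> bool:
    """Decide from a Last-Modified header value whether a page needs refetching.

    `since` must be timezone-aware. A missing or unparseable header means we
    cannot tell, so we assume the page changed.
    """
    if not last_modified:
        return True
    try:
        stamp = parsedate_to_datetime(last_modified)
    except (TypeError, ValueError):  # older Pythons raise TypeError, newer ValueError
        return True
    if stamp.tzinfo is None:
        # "-0000" dates parse as naive; treat them as UTC so comparison works.
        stamp = stamp.replace(tzinfo=timezone.utc)
    return stamp > since


def head_last_modified(url: str, timeout: float = 10.0) -> Optional[str]:
    """Issue a HEAD request (no body transferred) and return Last-Modified, if any."""
    req = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.headers.get("Last-Modified")
```

Usage would be `page_changed(head_last_modified(url), last_crawl_time)` for each page, which is exactly the one-request-per-page cost described above.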

My scraper can make a single GET request, figure out whether anything new has been posted to the forum, and decide whether it needs to make more requests. That's lightweight enough that I can run it every half hour, or even more often if I want.
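One way to implement a single-GET check is to fetch the index page once and fingerprint only the part that reflects new activity, so volatile content such as visitor counters doesn't trigger a deeper crawl. The `<td class="lastpost">` markup is a hypothetical stand-in for however the real forum shows each board's most recent post, and the parser only matches that exact class value.

```python
import hashlib
from html.parser import HTMLParser
from typing import List, Optional


class LastPostCells(HTMLParser):
    """Collect the text of every <td class="lastpost"> cell (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.cells: List[str] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; require the exact class value.
        if tag == "td" and ("class", "lastpost") in attrs:
            self._in_cell = True
            self.cells.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.cells[-1] += data


def index_fingerprint(index_html: str) -> str:
    """Hash only the last-post cells, ignoring the rest of the page."""
    parser = LastPostCells()
    parser.feed(index_html)
    parser.close()
    joined = "\n".join(cell.strip() for cell in parser.cells)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def needs_deeper_crawl(index_html: str, previous: Optional[str]) -> bool:
    """True if the last-post cells differ from the previous run (or there was none)."""
    return index_fingerprint(index_html) != previous
```

The scraper would store `index_fingerprint(html)` after each run and fetch board and topic pages only when `needs_deeper_crawl` returns `True`.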

I don't like having to write a specific scraper like this, but if DARPA won't upgrade their software to use RSS or Atom or something, I'm not sure there's any other way to get a feed.

Posted by: John Wiseman on June 27, 2007 10:41 AM