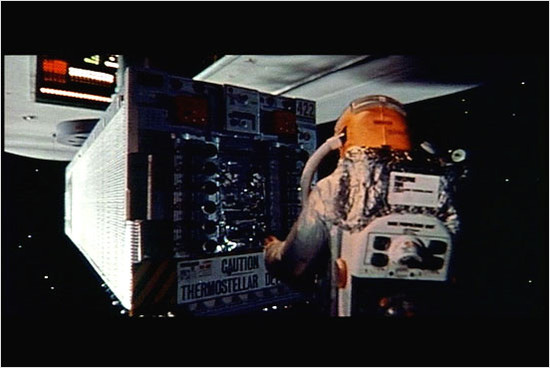

“Talk to the bomb. Teach it phenomenology.”

Ron Arkin's paper, “Governing Lethal Behavior: Embedding Ethics in a Hybrid Deliberative/Reactive Robot Architecture,” is pretty fascinating (and long: I've only gotten through half of its 120 or so pages).

Abstract:

This article provides the basis, motivation, theory, and design recommendations for the implementation of an ethical control and reasoning system potentially suitable for constraining lethal actions in an autonomous robotic system so that they fall within the bounds prescribed by the Laws of War and Rules of Engagement. It is based upon extensions to existing deliberative/reactive autonomous robotic architectures, and includes recommendations for (1) post facto suppression of unethical behavior, (2) behavioral design that incorporates ethical constraints from the onset, (3) the use of affective functions as an adaptive component in the event of unethical action, and (4) a mechanism in support of identifying and advising operators regarding the ultimate responsibility for the deployment of such a system.

Why autonomous systems may act more ethically than human soldiers:

Fortunately for a variety of reasons, it may be anticipated, despite the current state of the art, that in the future autonomous robots may be able to perform better than humans under these conditions, for the following reasons:

- The ability to act conservatively: i.e., they do not need to protect themselves in cases of low certainty of target identification. UxVs do not need to have self-preservation as a foremost drive, if at all. They can be used in a self-sacrificing manner if needed and appropriate without reservation by a commanding officer.

- The eventual development and use of a broad range of robotic sensors better equipped for battlefield observations than humans currently possess.

- They can be designed without emotions that cloud their judgment or result in anger and frustration with ongoing battlefield events. In addition, “Fear and hysteria are always latent in combat, often real, and they press us toward fearful measures and criminal behavior” [Walzer 77, p. 251]. Autonomous agents need not suffer similarly.

- Avoidance of the human psychological problem of “scenario fulfillment” is possible, a factor believed partly contributing to the downing of an Iranian airliner by the USS Vincennes in 1988 [Sagan 91]. This phenomenon leads to distortion or neglect of contradictory information in stressful situations, where humans use new incoming information in ways that only fit their pre-existing belief patterns, a form of premature cognitive closure. Robots need not be vulnerable to such patterns of behavior.

- They can integrate more information from more sources far faster before responding with lethal force than a human possibly could in real-time. This can arise from multiple remote sensors and intelligence (including human) sources, as part of the Army’s network-centric warfare concept and the concurrent development of the Global Information Grid.

- When working in a team of combined human soldiers and autonomous systems, they have the potential capability of independently and objectively monitoring ethical behavior in the battlefield by all parties and reporting infractions that might be observed. This presence alone might possibly lead to a reduction in human ethical infractions.

It is not my belief that an unmanned system will be able to be perfectly ethical in the battlefield, but I am convinced that they can perform more ethically than human soldiers are capable of.

On the potential impact autonomous weapons systems can have on our will to go to war:

But responsibility is not the lone sore spot for the potential use of autonomous robots in the battlefield regarding Just War Theory. In a recent presentation [Asaro 07] noted that the use of autonomous robots in warfare is unethical due to their potential lowering of the threshold of entry to war, which is in contradiction of Jus ad Bellum. One can argue, however, that this is not a particular issue limited to autonomous robots, but is typical for the advent of any significant technological advance in weapons and tactics, and for that reason will not be considered here. Other counterarguments could involve the resulting human-robot battlefield asymmetry as having a deterrent effect regarding entry into conflict by the state not in possession of the technology, which then might be more likely to sue for a negotiated settlement instead of entering into war. In addition, the potential for live or recorded data and video from gruesome real-time front-line conflict, possibly being made available to the media to reach into the living rooms of our nation’s citizens, could lead to an even greater abhorrence of war by the general public rather than its acceptance. Quite different imagery, one could imagine, as compared to the relatively antiseptic stand-off precision high altitude bombings often seen in U.S. media outlets.

A vain attempt to head off some inevitable comments:

I suppose a discussion of the ethical behavior of robots would be incomplete without some reference to [Asimov 50]’s “Three Laws of Robotics” (there are actually four [Asimov 85]). Needless to say, I am not alone in my belief that, while they are elegant in their simplicity and have served a useful fictional purpose by bringing to light a whole range of issues surrounding robot ethics and rights, they are at best a strawman to bootstrap the ethical debate and as such serve no useful practical purpose beyond their fictional roots. [AndersonS 07], from a philosophical perspective, similarly rejects them, arguing: “Asimov’s ‘Three Laws of Robotics’ are an unsatisfactory basis for Machine Ethics, regardless of the status of the machine”. With all due respect, I must concur.

Posted by jjwiseman at February 16, 2008 04:22 PM

I haven't read the paper yet and will comment again when I have. But the point of the Three Laws is that they encapsulate ethical human behavior. Indeed, there is no behavioral way to distinguish an Asenion robot from a human being who acts ethically.

Posted by: John Cowan on February 16, 2008 09:17 PM

First, screw Asimov and his goofy, pedantic science fiction.

Meatwise, whose ethical system gets codified?

Why should I believe that *any* ethical system is of benefit to those who wish to roboticize their continuation of policy by other means?

This is wishful thinking at best or an intellectualized rationalization to assuage guilt at worst. I think the latter.

Zyklon B is an excellent delousing agent.

Maybe we can use autonomous killing machines ethically once humans begin behaving ethically.

Posted by: Will on February 27, 2008 08:18 AM