February 26, 2008

Protecting Journalistic Integrity Algorithmically

A couple years ago, when Reuters photographer Adnan Hajj got in trouble for some really bad Photoshopping, I fantasized about adapting Evolution Robotics' object recognition technology and using it to automatically screen news photos for suspicious alterations.

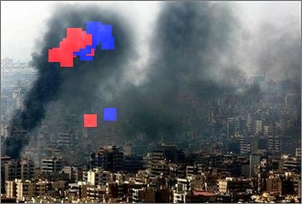

It turns out you don't need something as advanced as VIPR to detect simple cloning/stamping. John Graham-Cumming recently posted code to detect “copy-move forgery,” based on a paper by Jessica Fridrich, David Soukal and Jan Lukáš.

Copymove.zip contains Graham-Cumming's code, with a few modifications: I implemented the (big) speedup mentioned in this comment, and I changed it so the code only outputs the single final image containing all copied blocks, instead of multiple output images.

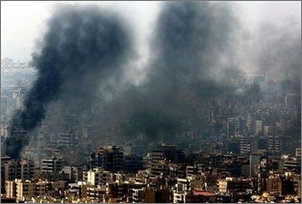

The algorithm is pretty good at detecting the alterations in Adnan Hajj's photos. On the left are Hajj's altered photos. On the right are the results of running the images through copymove.c, with red and blue squares showing the cloned sections.

The plume of smoke on the left side of the above photo was pretty obviously cloned, and caught by copymove. The plume on the right has some cloning too, and supposedly some buildings have been cloned.

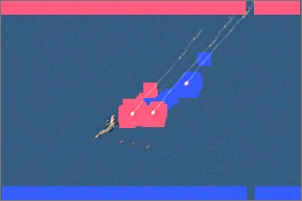

The jet in this photo dropped one flare, and Hajj copied it to create two more. The bands on the top and bottom might be an artifact of the image, or its processing, or the copymove algorithm.

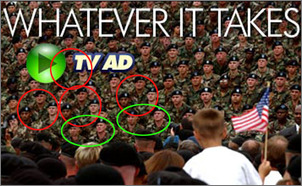

The image below is from a 2004 campaign ad for George Bush. “President Bush's campaign acknowledged Thursday that it had doctored a photograph used in a television commercial and said the ad will be re-edited and reshipped to TV stations.”

Copymove seems to have correctly identified the clones in the crowd.

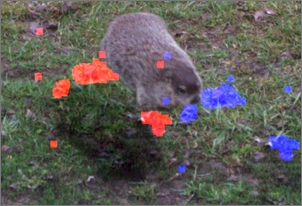

What about this magical hovering woodchuck?

Looks like another assault on photojournalistic integrity by someone with a stubby-little-legs-remover tool.

Unfortunately, copymove isn't quite ready to be run on every AP and Reuters photo. The program takes two parameters, a “quality” (blurring) factor and a threshold. I used a quality of 10 and a threshold of 20 for most of the images above, but those values don't work for all images. Some images (especially anything with a blurry background) are filled with false positives no matter what settings are used. And finally, I couldn't find an RSS or Atom feed containing high-quality news photos. (Are there any?)
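To make the idea concrete, here is a minimal Python sketch of block matching in the spirit of the Fridrich, Soukal and Lukáš paper. It is not Graham-Cumming's code and not what copymove.c does internally. The function name, the 4x4 block size, and the use of integer division as a crude stand-in for the “quality” factor are all my own assumptions. It cuts the image into overlapping blocks, sorts them so identical blocks end up next to each other, and keeps only the offsets (shifts) shared by at least `threshold` matching pairs.

```python
import random
from collections import defaultdict


def find_copied_blocks(image, block=4, quality=1, threshold=4):
    """Return {shift: [(source_xy, copy_xy), ...]} for repeated blocks.

    image is a list of rows of grayscale integers. quality is a hypothetical
    quantization step (pixel // quality), not copymove's blurring factor.
    """
    height, width = len(image), len(image[0])
    blocks = []
    for y in range(height - block + 1):
        for x in range(width - block + 1):
            key = tuple(image[y + dy][x + dx] // quality
                        for dy in range(block) for dx in range(block))
            blocks.append((key, (x, y)))

    # Sorting puts blocks with identical contents next to each other.
    blocks.sort()

    shifts = defaultdict(list)
    for (key_a, pos_a), (key_b, pos_b) in zip(blocks, blocks[1:]):
        if key_a == key_b:
            shift = (pos_b[0] - pos_a[0], pos_b[1] - pos_a[1])
            shifts[shift].append((pos_a, pos_b))

    # A real clone produces many block pairs that share one shift vector;
    # isolated coincidences fall below the threshold and are dropped.
    return {s: pairs for s, pairs in shifts.items() if len(pairs) >= threshold}


# Synthetic test image: random noise with one 6x6 patch cloned elsewhere.
rng = random.Random(0)
image = [[rng.randrange(256) for _ in range(16)] for _ in range(16)]
for dy in range(6):
    for dx in range(6):
        image[8 + dy][9 + dx] = image[1 + dy][1 + dx]

found = find_copied_blocks(image)
for shift, pairs in found.items():
    print(shift, len(pairs))
```

The false positives mentioned above fall out of this directly: in a flat or blurry region many blocks quantize to the same values, so they match each other even though nothing was cloned.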

Later: I accidentally uploaded an old version of the code. I've updated the zip file with the right version.

February 16, 2008

Ethics in Lethal Robots

“Talk to the bomb. Teach it phenomenology.”

Ron Arkin's paper, “Governing Lethal Behavior: Embedding Ethics in a Hybrid Deliberative/Reactive Robot Architecture” is pretty fascinating (and long—I've only gotten through half of the 120 or so pages).

Abstract:

This article provides the basis, motivation, theory, and design recommendations for the implementation of an ethical control and reasoning system potentially suitable for constraining lethal actions in an autonomous robotic system so that they fall within the bounds prescribed by the Laws of War and Rules of Engagement. It is based upon extensions to existing deliberative/reactive autonomous robotic architectures, and includes recommendations for (1) post facto suppression of unethical behavior, (2) behavioral design that incorporates ethical constraints from the onset, (3) the use of affective functions as an adaptive component in the event of unethical action, and (4) a mechanism in support of identifying and advising operators regarding the ultimate responsibility for the deployment of such a system.

Why autonomous systems may act more ethically than human soldiers:

Fortunately for a variety of reasons, it may be anticipated, despite the current state of the art, that in the future autonomous robots may be able to perform better than humans under these conditions, for the following reasons:

- The ability to act conservatively: i.e., they do not need to protect themselves in cases of low certainty of target identification. UxVs do not need to have self-preservation as a foremost drive, if at all. They can be used in a self-sacrificing manner if needed and appropriate without reservation by a commanding officer,

- The eventual development and use of a broad range of robotic sensors better equipped for battlefield observations than humans’ currently possess.

- They can be designed without emotions that cloud their judgment or result in anger and frustration with ongoing battlefield events. In addition, “Fear and hysteria are always latent in combat, often real, and they press us toward fearful measures and criminal behavior” [Walzer 77, p. 251]. Autonomous agents need not suffer similarly.

- Avoidance of the human psychological problem of “scenario fulfillment” is possible, a factor believed partly contributing to the downing of an Iranian Airliner by the USS Vincennes in 1988 [Sagan 91]. This phenomena leads to distortion or neglect of contradictory information in stressful situations, where humans use new incoming information in ways that only fit their pre-existing belief patterns, a form of premature cognitive closure. Robots need not be vulnerable to such patterns of behavior.

- They can integrate more information from more sources far faster before responding with lethal force than a human possibly could in real-time. This can arise from multiple remote sensors and intelligence (including human) sources, as part of the Army’s network-centric warfare concept and the concurrent development of the Global Information Grid.

- When working in a team of combined human soldiers and autonomous systems, they have the potential capability of independently and objectively monitoring ethical behavior in the battlefield by all parties and reporting infractions that might be observed. This presence alone might possibly lead to a reduction in human ethical infractions.

It is not my belief that an unmanned system will be able to be perfectly ethical in the battlefield, but I am convinced that they can perform more ethically than human soldiers are capable of.

On the potential impact autonomous weapons systems can have on our will to go to war:

But responsibility is not the lone sore spot for the potential use of autonomous robots in the battlefield regarding Just War Theory. In a recent presentation [Asaro 07] noted that the use of autonomous robots in warfare is unethical due to their potential lowering of the threshold of entry to war, which is in contradiction of Jus ad Bellum. One can argue, however, that this is not a particular issue limited to autonomous robots, but is typical for the advent of any significant technological advance in weapons and tactics, and for that reason will not be considered here. Other counterarguments could involve the resulting human-robot battlefield asymmetry as having a deterrent effect regarding entry into conflict by the state not in possession of the technology, which then might be more likely to sue for a negotiated settlement instead of entering into war. In addition, the potential for live or recorded data and video from gruesome real-time front-line conflict, possibly being made available to the media to reach into the living rooms of our nation’s citizens, could lead to an even greater abhorrence of war by the general public rather than its acceptance. Quite different imagery, one could imagine, as compared to the relatively antiseptic stand-off precision high altitude bombings often seen in U.S. media outlets.

A vain attempt to head off some inevitable comments:

I suppose a discussion of the ethical behavior of robots would be incomplete without some reference to [Asimov 50]’s “Three Laws of Robotics” (there are actually four [Asimov 85]). Needless to say, I am not alone in my belief that, while they are elegant in their simplicity and have served a useful fictional purpose by bringing to light a whole range of issues surrounding robot ethics and rights, they are at best a strawman to bootstrap the ethical debate and as such serve no useful practical purpose beyond their fictional roots. [AndersonS 07], from a philosophical perspective, similarly rejects them, arguing: “Asimov’s ‘Three Laws of Robotics’ are an unsatisfactory basis for Machine Ethics, regardless of the status of the machine”. With all due respect, I must concur.

February 13, 2008

Screedbot

Screedbot, “the animated scrolling typewriter text generator,” is Zach Beane's new lisp-powered web toy. Perfect for presenting your manifestos in online discussion forums with style (unless you want Cyrillic text).

February 08, 2008

February CRACLing

ASTHMA VAPINEZE sign on Fairfax, “LA's greatest secret”, via askmefi.

CRACL's next meeting is on Sunday, Feb. 17 at 6 PM.

Royal Clayton’s Pub in the downtown Arts District is the venue. We will convene around the pool table at 6 PM. This will help keep our group contained to facilitate “happy hour” prices throughout the evening. There is ample seating in this area.

Please look for the tell tale copy of a LISP programming manual on a table.

February 07, 2008

Arc Experiments

David Nichols' entombed Arduino.

Will experiments with Arc, and discusses the good and the bad (and note that Hacker News now seems to handle Unicode, with help from Patrick Collison).

0b5e55ed

Kragen categorizes words that can (kinda) be spelled in a 32-bit hex number according to potential use inside a software system [via est].

Things lacking some usual feature: ba5e1e55, face1e55, deaf, 5eed1e55, 5e1f1e55, ba1d

Recursion: ca5cade5

Graphical things: d00d1e, ea5e1, 5ca1ed

Subroutines: c0de, ca11ab1e, d0ab1e

Activation records: ca11ed

Encoding: c0ded, dec0ded

Ownership: deeded, 1ea5ed, ceded

Security and denials of service: 5afe, f100ded, 5ea1ed, f0e

Databases: DB

OO terms: facade, c1a55, 5e1f, ba5e

Large binary objects: b10b

Higher-order programming: f01d

Deception: f001ed

Compacting garbage collectors: c0a1e5ce

Ian Piumarta's design: c01a

Dates or base-10 numbers: decade

Things that are very determined or fault-tolerant: 0b5e55ed

Information leakage: b1abbed, b1ed, b1eed

Strings: babb1e, ba11ad

Things that don't make sense: Dada, baff1ed
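If you want to hunt for more of these, a small Python sketch follows. The substitution table (o to 0, l to 1, s to 5) is inferred from the examples in the list, not something Kragen spells out, and `to_hexspeak` is a made-up name. A word qualifies if, after substitution, it is at most eight hex digits, which is what fits in 32 bits.

```python
# Letter substitutions inferred from the list above (an assumption).
SUBSTITUTIONS = {"o": "0", "l": "1", "s": "5"}
HEX_DIGITS = set("0123456789abcdef")


def to_hexspeak(word):
    """Return the hex spelling of word, or None if it doesn't fit in 32 bits."""
    candidate = "".join(SUBSTITUTIONS.get(ch, ch) for ch in word.lower())
    if 0 < len(candidate) <= 8 and set(candidate) <= HEX_DIGITS:
        return candidate
    return None


for word in ["obsessed", "callable", "coalesce", "python"]:
    print(word, to_hexspeak(word))
```

Feeding it a word list such as /usr/share/dict/words (if your system has one) is an easy way to generate candidates.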

February 06, 2008

arduino_serial

It's Mars.

arduino_serial.py is a Python port of Tod E. Kurt's arduino-serial.c program for communicating with an Arduino microcontroller board over a serial port. It only uses standard Python modules (notably termios and fcntl) and does not require any special serial communications modules.

Like Tod's program, it can be used from the command line.

Send the string “a5050” to Arduino:

$ ./arduino_serial.py -b 19200 -p /dev/tty.usbserial-A50018fz -s a5050

This would cause the pan-tilt head described in this previous post to return to its middle position.

Receive a line of text from Arduino, wait 1000 milliseconds, then send the string “a0000”:

$ ./arduino_serial.py -b 19200 -p /dev/tty.usbserial-A50018fz -r -d 1000 -s a0000

Complete command line usage information:

Usage: arduino-serial.py -p <serialport> [OPTIONS]

Options:

-h, --help Print this help message

-p, --port=serialport Serial port Arduino is on

-b, --baud=baudrate Baudrate (bps) of Arduino

-s, --send=data Send data to Arduino

-r, --receive Receive data from Arduino & print it out

-n --num=num Send a number as a single byte

-d --delay=millis Delay for specified milliseconds

Note: Order is important. Set '-b' before doing '-p'.

Used to make series of actions: '-d 2000 -s hello -d 100 -r'

means 'wait 2secs, send 'hello', wait 100msec, get reply'
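The "order is important" note means the options are treated as a script of actions rather than as independent settings. As a rough illustration (this is not the actual parsing code in arduino_serial.py, and `parse_actions` is a hypothetical name), the standard `getopt` module returns options in the order they were given, so they can be turned into an ordered action list like this:

```python
import getopt

# Option letters and long names taken from the usage text above.
SHORT_OPTS = "hp:b:s:rn:d:"
LONG_OPTS = ["help", "port=", "baud=", "send=", "receive", "num=", "delay="]
SHORT_TO_NAME = {"-h": "help", "-p": "port", "-b": "baud", "-s": "send",
                 "-r": "receive", "-n": "num", "-d": "delay"}
NUMERIC = {"baud", "num", "delay"}


def parse_actions(argv):
    """Turn command-line options into an ordered list of (action, value)."""
    opts, _ = getopt.getopt(argv, SHORT_OPTS, LONG_OPTS)
    actions = []
    for flag, value in opts:
        name = SHORT_TO_NAME.get(flag, flag.lstrip("-"))
        if name in NUMERIC:
            value = int(value)
        elif value == "":
            value = None  # flags such as -r take no argument
        actions.append((name, value))
    return actions


print(parse_actions("-d 2000 -s hello -d 100 -r".split()))
```

A caller would then walk the list from front to back, which is why '-b' has to come before '-p': the baud rate needs to be known by the time the port is opened.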

You can also import arduino_serial and use its SerialPort class to communicate with an Arduino from a Python program.

import arduino_serial

arduino = arduino_serial.SerialPort('/dev/ttyUSB0', 19200)

print(arduino.read_until('\n'))

arduino.write('a5050')
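For anyone curious what talking to a serial port with only the standard library involves, here is a rough sketch written for Python 3. It is not the contents of arduino_serial.py. `configure_port` and this standalone `read_until` are hypothetical helpers, and the particular flag choices (8 data bits, raw mode, blocking one-byte reads) are my assumptions about a reasonable setup.

```python
import os
import termios

# Map plain baud numbers to the termios constants.
BAUD_RATES = {9600: termios.B9600, 19200: termios.B19200,
              38400: termios.B38400}


def configure_port(fd, baud):
    """Put an open serial file descriptor into raw 8N1 mode at baud."""
    attrs = termios.tcgetattr(fd)
    # attrs is [iflag, oflag, cflag, lflag, ispeed, ospeed, cc]
    attrs[0] = 0                                             # no input processing
    attrs[1] = 0                                             # no output processing
    attrs[2] = termios.CS8 | termios.CREAD | termios.CLOCAL  # 8 bits, receiver on
    attrs[3] = 0                                             # no echo, no line editing
    attrs[4] = attrs[5] = BAUD_RATES[baud]
    attrs[6][termios.VMIN] = 1                               # block until 1 byte arrives
    attrs[6][termios.VTIME] = 0
    termios.tcsetattr(fd, termios.TCSANOW, attrs)


def read_until(fd, terminator=b"\n"):
    """Read one byte at a time until terminator is seen or the stream ends."""
    data = b""
    while not data.endswith(terminator):
        chunk = os.read(fd, 1)
        if not chunk:
            break
        data += chunk
    return data
```

With a real board you would open the device first, for example `fd = os.open('/dev/ttyUSB0', os.O_RDWR | os.O_NOCTTY)`, call `configure_port(fd, 19200)`, and then use `os.write(fd, b'a5050')` and `read_until(fd)`.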

February 01, 2008

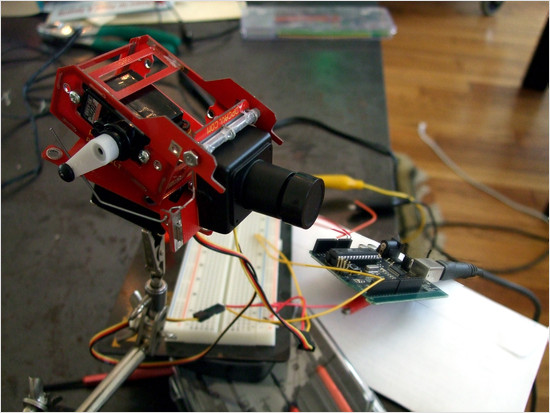

TILT

For UAV purposes I bought a kit for a tiny little pan/tilt head and a tiny little video camera and a couple tiny little servo motors. Before I completely installed the motors into the pan/tilt head I wanted to test them, so I found some servo code for my Arduino board and wired it all up. The motors worked. But the whine of those little servos is addictive and I couldn't stop there.

So I fancied up the Arduino code to take commands from the serial port. Then I wrote some Python code to read the tilt sensors in my laptop and send commands to the Arduino. Here's the result:

Reading the Mac's Sudden Motion Sensor was kind of a pain. Neither Amit Singh's AMSTracker nor pyapplesms read my MacBook Pro's Y-axis value correctly. Fortunately Daniel Griscom's SMSLib worked perfectly.

My Python code runs the smsutil program from SMSLib (patched to flush its output after each line; I couldn't figure out how to get the buffering to work correctly otherwise), then uses Tod Kurt's arduino-serial program to send commands to the Arduino (because when I opened the serial port directly in Python the program would hang).
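The buffering issue is a general one: most programs buffer their output in large chunks when it goes to a pipe instead of a terminal, so a reader sees nothing until the buffer fills unless the writer flushes after each line. Here is a minimal Python 3 sketch of the reading side. It is not sms_servo.py. The child process is a stand-in for smsutil (whose output format I'm not assuming anything about), and `stream_lines` is a hypothetical helper.

```python
import subprocess
import sys


def stream_lines(command):
    """Yield each line of a child process's output as soon as it arrives."""
    proc = subprocess.Popen(command, stdout=subprocess.PIPE,
                            text=True, bufsize=1)
    try:
        for line in proc.stdout:
            yield line.rstrip("\n")
    finally:
        proc.stdout.close()
        proc.wait()


# Stand-in for the sensor program: prints two numbers per line.
# "-u" makes the child write unbuffered, the same effect as flushing per line.
CHILD = [sys.executable, "-u", "-c", "for i in range(3): print(i, i * 2)"]

readings = [tuple(int(v) for v in line.split()) for line in stream_lines(CHILD)]
print(readings)
```

In the real setup each reading would be converted into a servo command and sent to the Arduino inside the loop, instead of being collected into a list.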

It's all kind of hacky because I was in a hurry, but it works.

Arduino code: pandora_pan_tilt.pde.

Python code: sms_servo.py.

Update: I had some problems with the serial comms getting out of sync, so I modified the Arduino code and the Python code to make them a little more robust.