July 27, 2007

Automatic Rumor

Chris Csikszentmihályi has an article about the use of military robots in the Winter 2007 issue of Bidoun. I've scanned it into a PDF (automatic-rumor.pdf), and you can read the OCRed text below.

AUTOMATIC RUMOR

Networks of images, lives, and deaths

By Chris Csikszentmihályi

“In February 2002, a Predator tracked and killed a tall man in flowing robes along the Pakistan-Afghanistan border. The CIA believed it was firing at Bin Laden, but the victim turned out to be someone else.”

—The Los Angeles Times, January 24, 2006

“American government officials said one of the people in the group was tall and was being treated with deference by those around him. That gave rise to speculation that the attack might have been directed at Osama Bin Laden, who is 6-feet-4.”

—The New York Times, February 17, 2002

Is the United States military trained to interpret images? Have Predator pilots read Sontag, Svetlana Alpers, C. S. Peirce? As the decision to kill is increasingly based on images relayed from autonomous drones, one can't help but ponder what kind of training the military receives in interpreting visual media.

War by robot proxy: America is building a world in which its citizens won't ever actually have to go to battle. Policies and associated technologies are engineered to prevent Americans from experiencing war firsthand. Though journalists once acted as civilian proxies, something changed with the war in Vietnam, when the military began to view domestic opposition to war as a kind of enemy at home. Through exclusion, intimidation, and fastidious embedding, it has since kept journalists far from the realities of the field.

In fact, soldiers themselves are increasingly replaced by prosthetic technologies; remotely operated machines are playing the roles once played by humans. The Department of Defense is projected to spend nearly $3 billion in 2008 alone for Unmanned Aerial Vehicles such as the Predator, a spindly, medium-altitude, long-range aircraft developed after the first Persian Gulf War.

These relatively inexpensive ($4 million) airplanes, usually piloted remotely from halfway around the world, can stay airborne for an entire day at a time. Originally marketed as surveillance devices, they were first used for targeted assassination purposes in Yemen, in 2002. To add to their formidable entourage, the US military most recently purchased SWORDs, 80-lb robots that can be outfitted with machine guns and grenade and rocket launchers. A human soldier can operate the robot from several thousand feet away, simply by watching a video transmission from the robot and controlling it remotely.

While these systems currently require human involvement—watching a blinking screen of pulsating pixels, making choices based on split-second interpretations—increasingly, autonomous software will be used to make even the biggest of decisions. Says Colonel Tom Ehrhard of the US Air Force, “Flight automation is riding the wave of Moore's law, and it gets increasingly sophisticated. Automation will make it so that even adaptations in flight in combat will be made independently of human input.” In the Pentagon's vision of the future, it will be software engineers who decide who gets to live or die, preserving their ethical choices in code that's executed later.

Images taken from a Predator's camera are circulated within a network of soldiers, spies, analysts, and pilots. In the few telemetry videos from Predators that have made their way to the internet (most often onto YouTube, probably leaked by the military itself), a half-dozen voices are overheard in urgent teleconferenced debates on what they are seeing, how to respond, when to fire, and at what. Sequestered air-conditioned workspaces are the new front, where the cubicle meets the cockpit.

In February 2002, the image of Daraz Khan, 5'11", walking near Khost, Afghanistan, looking for scrap metal to sell, wended its way up 15,000 feet to a Predator; was sucked into a massive lens on a computer-stabilized gimbal; was projected onto a Forward Looking Infrared near-field focal plane array; was piped through an analog-to-digital converter, through microcontrollers and computers and then a spread-spectrum modem, up to a satellite, then down to ground station computers, perhaps in Virginia or Germany; and was finally displayed on a flat-panel monitor studied by human eyes.

We have little reference for understanding images of this nature; they are like a documentary, in that they offer a view, with implicit and explicit perspective, of a nonfiction event. They are also like computer games, in that the viewer is meant to interfere in that event, engage with it. But drone-generated video is unlike documentary or video games, or indeed any other visual media, in that the decisions made on the basis of it are both immediate and, increasingly, fatal.

These newly mediated images of war are like old ones in that they are hermeneutic. Freshman art history students are taught the interpretability of images, and by the time they're seniors, they'll have learned that photographic evidence is only as credible as the host of experts called in to explain it. By the time they're seniors, they'll know that for decades after its invention, photography was leveraged to prove the existence of ghosts. In 1869 a Boston photographer was taken to court for selling ghost photographs. Experts were called in on both sides, though the judge dismissed the case altogether for lack of evidence. Dozens of internet sites, to this day, give tips on how to take (digital!) images of ghosts. Why is it, one might ask, that only humanists are trained in this history, never engineers or soldiers?

One particular cockpit video that swept the web in 2004 portrayed the obliteration of a large group of men in Fallujah, an obliteration based on no apparent information other than that they were men, and walking out of a mosque. Do the millions of dollars of imaging equipment used to make such film present a neutral image? Is the suspicion inherent in the taking of these images not transmitted through the apparatus to the viewer? The novelist Max Frisch once said, “Technology is the knack of so arranging the world that we don't have to experience it.” After seeing the real-time video of the Fallujah bomb destroying a score of anonymous pedestrians, the overwhelmed weapons operator simply sighed, “Oh, dude.” He was flying blind.

Previous pages:

http://video.google.com/videoplay?docid=-6117620228557384079&q=oh+dude%2C+fallujah

http://video.google.com/videoplay?docid=-6462134772429695507&q=video+shooting+iraq

http://www.liveleak.com/view?i=c798023325

http://video.google.com/videoplay?docid=-7798905029323266678&q=predator+iraq

July 24, 2007

Several Cheap UAVs

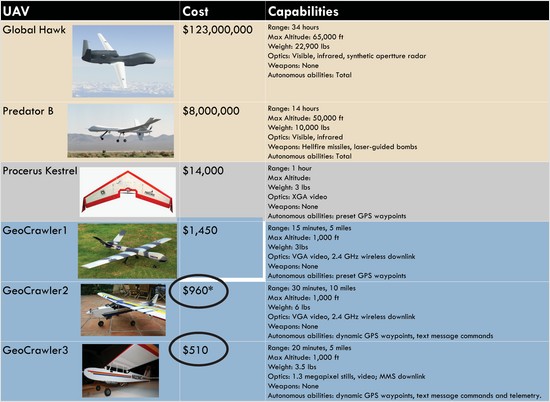

Chris Anderson now has several sub-$1K UAV designs.

Geocrawler 2 is the Lego Mindstorms NXT-based UAV (though by my calculations it's closer to $1500). I don't think the software is quite ready for any of them yet.

From his Geocrawler 2 post:

(There is another aim of this project, which is more about policy. At the moment the FAA regulations on UAVs are ambiguous (we believe that by staying below 400 feet and within line-of-sight we're within them). But there is a good deal of concern that as small and cheap UAVs become more common, the FAA will toughen the rules, making activities such as ours illegal without explicit approval. I hope this project will illustrate why that approach won't work.

By creating a UAV with Lego parts and built in part by kids, we haven't just created a "minimum UAV", we've created a reductio ad absurdum one. If children can make UAVs out of toys, the genie is out of the bottle. Clear use guidelines (such as staying below 400 feet and away from tall buildings) would be welcome, but blanket bans or requirements for explicit FAA approval for each launch will be too hard to enforce. The days when there was a limited "UAV industry" that could be regulated are gone.)

Yeah.

July 22, 2007

Global Synchronization Through Local Effects

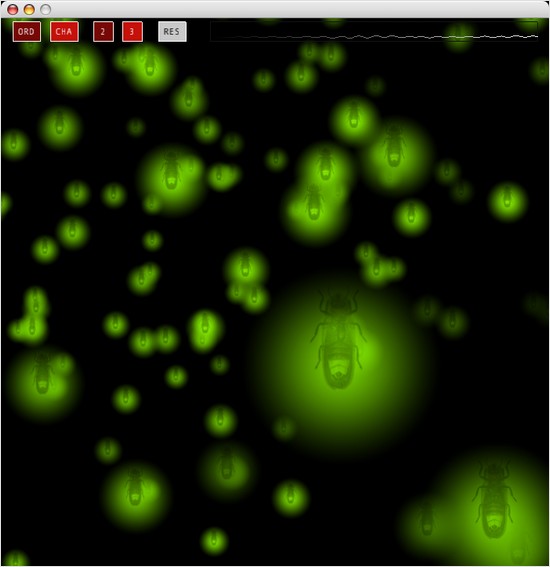

After seeing Alexander Weber's microcontroller-simulated fireflies, and reading “Firefly Synchronization in Ad Hoc Networks”, I decided I was ready to learn Processing.

You can try the applet here. Let it run for at least several minutes.

The idea is that when one firefly sees another nearby firefly flash, it hurries up and flashes a little bit sooner than it would have otherwise. Pretty simple.
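Here is a minimal Python sketch of that rule (not the applet's code). It assumes the Mirollo-Strogatz pulse-coupled oscillator model: each firefly's phase climbs from 0 to 1, the firefly flashes and resets when it reaches 1, and seeing a flash nudges every other firefly's phase from φ to min(1, αφ + β). Because α > 1, fireflies that are already close to flashing get pushed harder, which is what pulls the group together. Two simplifications belong to this sketch only: every firefly can see every other one (the applet only uses nearby fireflies), and time jumps straight from one flash to the next instead of stepping frame by frame. The values b = 3 and eps = 0.1 are arbitrary.

```python
import math
import random


def mirollo_strogatz_jump(b: float = 3.0, eps: float = 0.1) -> tuple[float, float]:
    """Return (alpha, beta) for the phase jump phi -> min(1, alpha*phi + beta).

    Assumed model: Mirollo-Strogatz oscillators with state curve
    f(phi) = ln(1 + (e^b - 1) * phi) / b and pulse strength eps.
    """
    alpha = math.exp(b * eps)
    beta = (math.exp(b * eps) - 1.0) / (math.exp(b) - 1.0)
    return alpha, beta


def next_flash(phases: list[float], alpha: float, beta: float) -> set[int]:
    """Advance time to the next flash, apply the nudges, and return who flashed."""
    lead = max(phases)
    gap = 1.0 - lead
    # Fireflies sharing the highest phase flash together.
    flashed = {i for i, p in enumerate(phases) if p == lead}
    for i in range(len(phases)):
        if i not in flashed:
            phases[i] += gap
    newly = list(flashed)
    # Every firefly that sees a flash hurries up (one nudge per flash seen).
    # Any that gets pushed all the way to 1.0 flashes immediately too,
    # which can set off others in turn.
    while newly:
        pushed = []
        for i in range(len(phases)):
            if i in flashed:
                continue
            for _ in newly:
                phases[i] = min(1.0, alpha * phases[i] + beta)
            if phases[i] >= 1.0:
                pushed.append(i)
        flashed.update(pushed)
        newly = pushed
    for i in flashed:
        phases[i] = 0.0
    return flashed


def simulate(n: int = 10, flashes: int = 1000, seed: int = 1) -> list[float]:
    """Start n fireflies at random phases and run for a number of flash events."""
    rng = random.Random(seed)
    phases = [rng.random() for _ in range(n)]
    alpha, beta = mirollo_strogatz_jump()
    for _ in range(flashes):
        next_flash(phases, alpha, beta)
    return phases


if __name__ == "__main__":
    final = simulate()
    print("distinct phases after 1000 flashes:", len(set(final)))
```

Running it prints how many distinct phases are left; a count of 1 means the whole swarm is flashing in unison. Once two fireflies flash together they get identical updates from then on, so synchronized groups never split apart.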

I didn't realize that lightning bugs even tried to synchronize their flashes, but this applet does remind me of some of the patterns I would see as a kid on Midwestern summer nights.

Update: Uri Wilensky has a firefly synchronization applet, and it's practically in Lisp.

Update: Pretty much right after I posted this I realized the color was off, so there's a new version now with yellower flashes. And more realistic firefly movement.

July 19, 2007

The Crazy

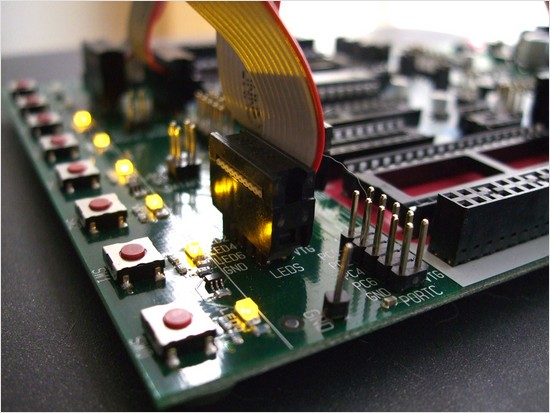

My new STK500 microcontroller development board.

This business of making little machines that interact with the physical world is addicting. I can feel the crazy coming on, and I like it.

July 17, 2007

DARPA Urban Challenge Site Visits

DARPA will visit and evaluate 53 teams for the upcoming Urban Challenge, but probably only last year's winning team gets their site visit written up in Wired and Spectrum.

From Wired:

“It drives like my grandma,” exclaimed one bystander, as Junior cautiously pulled up to an intersection, turned on its blinker, waited ten seconds, and then pulled cautiously and jerkily around the curve.

[...]

During the course of the morning, the Stanford team members put Junior through its paces before the watchful eyes of DARPA. First they tested Junior's ability to stop on demand, by remote control. Then the car navigated itself through a simple course marked out with yellow lines and orange cones, stopping at intersections indicated by white bars on the pavement. The next test involved driving through the course and passing around stopped cars. And the fourth test required Junior to approach intersections, wait while another car drove through it, and then drive through the intersection itself.

“Negotiating an intersection is a complicated thing,” said Anya Petrovskaya, a PhD candidate in computer science at Stanford, and the team member who wrote the software responsible for calculating other vehicles' speed and direction. “People do all kinds of things like making eye contact and waving their hands, and Junior doesn't have any of that information.”

Junior is an exceedingly conservative driver, acknowledged Montemerlo. Partly because of that conservatism, Junior got stuck during the third trial, where it stopped dead while passing a parked car, unable to go forward because it had insufficient clearance between the other car and the edge of its “road.”

[...]

Team leader Sebastian Thrun envisions a day when humans are no longer required to drive their cars. “First of all, cars are unsafe. We kill something like 42,000 people per year, and most of those deaths are due to human error. Second, cars are inefficient. They require a lot of time and attention to drive... I think that autonomous cars will really change society.”

Spectrum has links to videos of the Stanford site visit.

Not every team's visit went as well.

July 16, 2007

Powerset Anti-hype

Jenny Holzer is the only person who should be allowed to use Twitter.

Powerset recently invited 40 people to their offices for a preview/demo/town meeting:

About 10 minutes in I thought that the crowd was being a little too easy on us and I asked the folks to step up and start asking some tough questions. WOW! did it start to get interesting from that moment forward and that's what made the meeting great at the end of the day.

ZDNet has a writeup:

Parsing pages is a scaling and potential performance problem. Newcomb said the barrier is getting it down to seconds per sentence–Wikipedia has 25 sentences on average per page. Powerset has made a big investment in a datacenter. “It takes us one second right now, and we are driving it down every month,” Newcomb said. At this point, Powerset has 720 Intel cores in its datacenter, Newcomb said, and is riding Moore's Law to improve economics and performance.

(one second per sentence sounds super slow to me, but what do I know.)
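For a sense of scale, here is the arithmetic behind Newcomb's numbers as a small Python sketch. It assumes the one-second figure means one core-second per sentence and that all 720 cores parse in parallel with no other overhead; the ZDNet quote doesn't say either way.

```python
# Figures quoted by Newcomb in the ZDNet writeup.
SECONDS_PER_SENTENCE = 1.0   # assumption: one second of one core per sentence
SENTENCES_PER_PAGE = 25      # average Wikipedia page
CORES = 720                  # Intel cores in Powerset's datacenter

SECONDS_PER_DAY = 24 * 60 * 60


def core_seconds_per_page(sec_per_sentence: float = SECONDS_PER_SENTENCE,
                          sentences_per_page: int = SENTENCES_PER_PAGE) -> float:
    """CPU time one core spends parsing an average page."""
    return sec_per_sentence * sentences_per_page


def pages_per_day(cores: int = CORES,
                  sec_per_sentence: float = SECONDS_PER_SENTENCE,
                  sentences_per_page: int = SENTENCES_PER_PAGE) -> float:
    """Pages the whole cluster can parse in a day, assuming perfect parallelism."""
    per_page = core_seconds_per_page(sec_per_sentence, sentences_per_page)
    return cores * SECONDS_PER_DAY / per_page


if __name__ == "__main__":
    print(f"{core_seconds_per_page():.0f} core-seconds per page")
    print(f"{pages_per_day():,.0f} pages per day on {CORES} cores")
```

Under those assumptions, 25 sentences at a second each is 25 core-seconds per average page, or 720 × 86,400 / 25 = 2,488,320 pages per day for the whole cluster, and every halving of the per-sentence time doubles that.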

Even uncov was outrageously (for them) impressed:

Powerset and Meebo have both raised $12 million to fund their operation. Natural Language Search vs. AJAX+Gaim chatting. Which investment seems more justified to you?

I think Powerset has a great shot at success. Makes me wish I were obsessed with search in the way I'm obsessed with flying robots.

July 15, 2007

Do Lisp Programmers Really Exist?

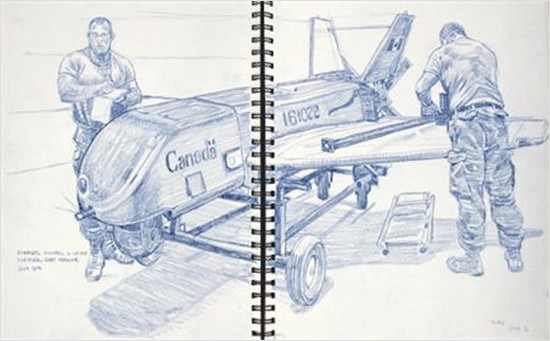

Richard Johnson is a reporter/artist in Kandahar.

Do Lisp Programmers Really Exist?

There have been several attempts at proving the existence of LISP programmers. However, these have suffered from a serious lack of standardization. Nobody could actually say what this LISP programmer is or what he looks like. In the end, through considerable effort, most partisans of the LISP programmer theory have reached a common conclusion:

- The LISP programmer is simple, without composition of parts, such as body and soul, or matter and form. Nevertheless, the LISP programmer is likely to take the shape of a parenthesis, should he incarnate.

- The LISP programmer is perfect, lacking nothing, and is completely distinguished from other beings.

- The LISP programmer is infinite. That is, he is not finite in the ways that created programs are physically or technically limited.

- The LISP programmer is immutable, incapable of change in his essence and character.

- The LISP programmer is one, without diversification. His essence is the same as his existence. However, despite being a single entity, he does have several persons belonging to the same being.

Immutable, you say.

July 10, 2007

Robot Blimp Pioneers

From Shorpy:

“Anthony's Wireless Airship.” A small powered blimp used in 1912 to demonstrate remote control of aircraft by wireless telegraphy. (“Professor Anthony has exhibited a method of airship control of his own by wireless. He and Leo Stephens recently gave an exhibition of starting, controlling, turning and stopping an airship by wireless which was quite a long distance from the station which controlled its action.”)

From “An Epitome of the Work of the Aeronautic Society [of New York] from July, 1908, to December, 1909”, describing an Aeronautical Evening (an offshoot of the Automobile Club, apparently) in 1909:

Hudson Maxim, the famous inventor, gave his views on the future of the flying machine in war. The Hon. Col. Butler Ames, M.C., described, and for the first time showed photographs and moving pictures of, his new machine, and his experiments at the Navy Yard, Washington, and on the Potomac River. M. O. Anthony gave a demonstration of his remarkable invention for the control of airships by means of wireless telegraphy. The evening closed with a fine display of moving pictures of machines in flight, the first display of the kind ever made in this country. A unanimous vote was passed urging Congress to appropriate generous sums for the development of aeronautics for the Army.

These guys were the Homebrew Computer Clubbers and Makers of their time.

And check this out, from the same page:

Lesh brought his glider to the exhibition, and made a number of fine glides, towed by a horse and also by an automobile. It was his purpose in his last flight, in an endeavor to win the Brooklyn Eagle gold medal, to cut the tow-line when he had reached a sufficient height. He did so, but the crowd got in his way, and hampered him in landing. He fell and broke his right ankle.

Unfortunately, the fractures were badly set at the Fordham Hospital, and later it became necessary to place Lesh under the care of a specialist at Hahnemann Hospital. The plucky boy had a bad time for a long while. But he is now well again, and though slightly lame, Dr. Geo. W. Roberts gives every assurance that eventually he will be all right. On his reappearance at a meeting of the Society, Lesh was at once unanimously elected a Complimentary member, and he is now again taking an active part.

There is an RC plane field not far from me with a web discussion board that, 100 years later, reads much like this: people showing off new stuff to each other, recounting failures, even reporting serious injuries (with subsequent attempts to call for aid via wireless). I bet the monoplane enthusiasts and the dirigible gentlemen of the turn of the century occasionally lost their tempers and called each other names, in the same way the modern RCers divide themselves into quarreling factions based on whether they fly helicopters or electric planes or models of military aircraft.

It might only be in contemporary SoCal, though, where someone will pull a knife on you for landing your wireless airship against the traditional field pattern.

July 02, 2007

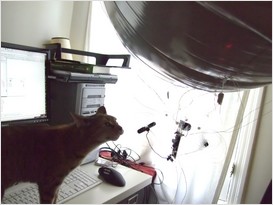

Blubber Bots at Machine Project

Saturday I and a half-dozen or so other people built Blubber Bots at a Machine Project workshop, led by Jed Berk (the Blubber Bots are descended from his ALAVs, previously on lemonodor). It was so fun! And my blimp is my new favorite robot.

Flying robots really are the best. They don't care about stairs or raised floor thresholds (poor Roomba). A small blimp makes an excellent indoor robot pet platform, and Jed's design makes the most of it. I always wondered what kind of control authority a blimp would have, and while the Blubber Bot is kind of like a clumsy airborne manatee, it's actually pretty good about seeking out light and backing away from obstacles that trigger its pink pom-pom-tipped bump sensor. When it detects a cell phone call (the phone has to be within a few inches of the sensor) it goes into dance mode, spinning in place and playing a tune. I spent 45 minutes just watching the thing fly around my apartment. More than any other robot I've worked with, it's very easy to anthropomorphize.
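One simple way to combine behaviors like these is a fixed priority list, checked top to bottom on every control tick. The Python sketch below is hypothetical and is not Jed Berk's firmware: the sensor fields, the two-light-sensor layout, the priority order (phone beats bump beats light), and the deadband value are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sensors:
    """One snapshot of a hypothetical blimp's inputs."""
    phone_nearby: bool    # cell phone signal picked up within a few inches
    bumped: bool          # pom-pom bump sensor triggered
    light_left: float     # hypothetical left/right light readings, 0.0 to 1.0
    light_right: float


def choose_action(s: Sensors, deadband: float = 0.05) -> str:
    """Pick one behavior, highest priority first."""
    if s.phone_nearby:
        return "dance"        # spin in place and play a tune
    if s.bumped:
        return "back_away"    # retreat from whatever it hit
    diff = s.light_left - s.light_right
    if abs(diff) < deadband:
        return "forward"      # light is roughly straight ahead
    return "turn_left" if diff > 0 else "turn_right"


if __name__ == "__main__":
    print(choose_action(Sensors(phone_nearby=False, bumped=False,
                                light_left=0.9, light_right=0.1)))
```

The deadband keeps the blimp from twitching left and right when the two light readings are nearly equal; a higher-priority behavior simply wins whenever its trigger is present.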

More photos here and here. Kits are available from Make.